Best Practices for Efficiently Testing Simulink Models

In Model-based Development (MBD), embedded software engineers design software systems with the help of model visualization and analysis. As models grow more complex and the pressure to meet safety standards increases, testing becomes more time-consuming. At the same time, testing these models thoroughly before code generation is what minimizes the risk of failure in safety-critical systems. This blog article summarizes best practices and techniques that help you test your models efficiently in an MBD process.

1. Hierarchical Testing

In model-based development, model elements are structured in a hierarchy that represents the relationships and dependencies between the components of the model (usually subsystems or statecharts). This hierarchy helps define the overall architecture and behavior of the system.

Being able to test each subsystem independently can significantly improve testing efficiency. This approach not only speeds up testing but also helps identify weaknesses in individual subsystems, ultimately making the entire system more robust. Testing each unit against its requirements with functional test cases makes the overall process much more resilient.

From an AUTOSAR standpoint, hierarchical testing is even more important, as runnables are organized hierarchically within a software component. This allows each runnable to be tested individually based on its inputs and outputs, which streamlines the testing process. Breaking testing down into manageable parts improves overall efficiency and helps identify and address potential problems at a finer level of detail.
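To make the idea concrete, here is a minimal Python sketch, assuming that each subsystem (or runnable) can be treated as a pure function of its inputs. The functions `saturate`, `rate_limit`, and `controller_step` and the requirement IDs are hypothetical stand-ins, not part of any Simulink or AUTOSAR API. The point is that each unit is exercised only through its own inputs and outputs, and the parent subsystem that composes them gets its own cases.

```python
"""Sketch: testing units in isolation before testing their composition.

All functions and requirement IDs here are hypothetical stand-ins for
Simulink subsystems or AUTOSAR runnables.
"""


def saturate(u: float, lo: float = -100.0, hi: float = 100.0) -> float:
    """Hypothetical subsystem A: clamp a demand to [lo, hi]."""
    return max(lo, min(hi, u))


def rate_limit(u: float, prev: float, max_step: float = 10.0) -> float:
    """Hypothetical subsystem B: limit the change per step to +/- max_step."""
    step = max(-max_step, min(max_step, u - prev))
    return prev + step


def controller_step(demand: float, prev_out: float) -> float:
    """Hypothetical parent subsystem: composition of A and B."""
    return rate_limit(saturate(demand), prev_out)


# Functional test cases per unit: (inputs, expected output, requirement ID).
UNIT_TESTS = {
    "saturate": [((150.0,), 100.0, "REQ-1"),
                 ((-150.0,), -100.0, "REQ-1"),
                 ((42.0,), 42.0, "REQ-1")],
    "rate_limit": [((50.0, 0.0), 10.0, "REQ-2"),
                   ((-50.0, 0.0), -10.0, "REQ-2"),
                   ((5.0, 0.0), 5.0, "REQ-2")],
    "controller_step": [((500.0, 95.0), 100.0, "REQ-3")],
}

UNITS = {"saturate": saturate, "rate_limit": rate_limit,
         "controller_step": controller_step}


def run_unit_tests() -> list:
    """Run every unit's cases through its own interface; return the failures."""
    failures = []
    for name, cases in UNIT_TESTS.items():
        for args, expected, req in cases:
            actual = UNITS[name](*args)
            if abs(actual - expected) > 1e-9:
                failures.append((name, req, args, expected, actual))
    return failures
```

Because every failure record carries the unit name and requirement ID, a failing case points directly at the responsible subsystem rather than at the model as a whole.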

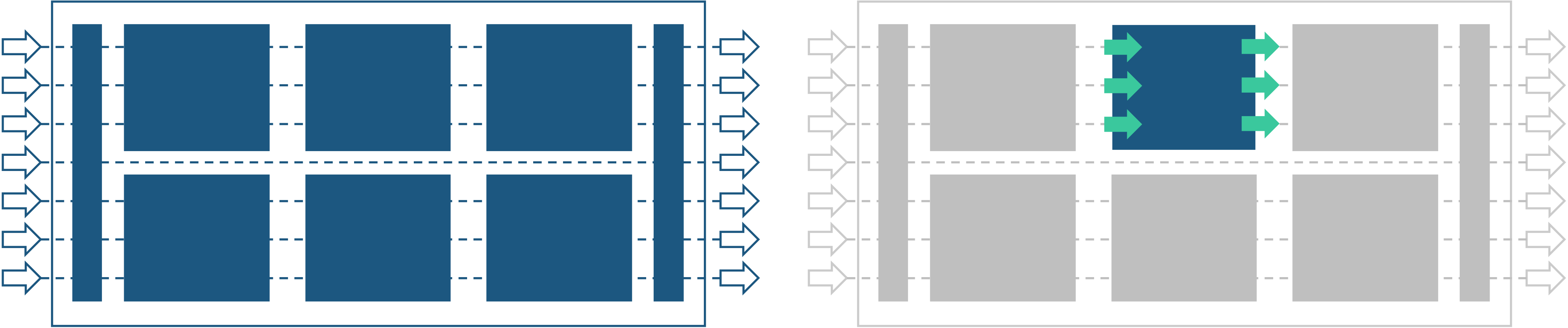

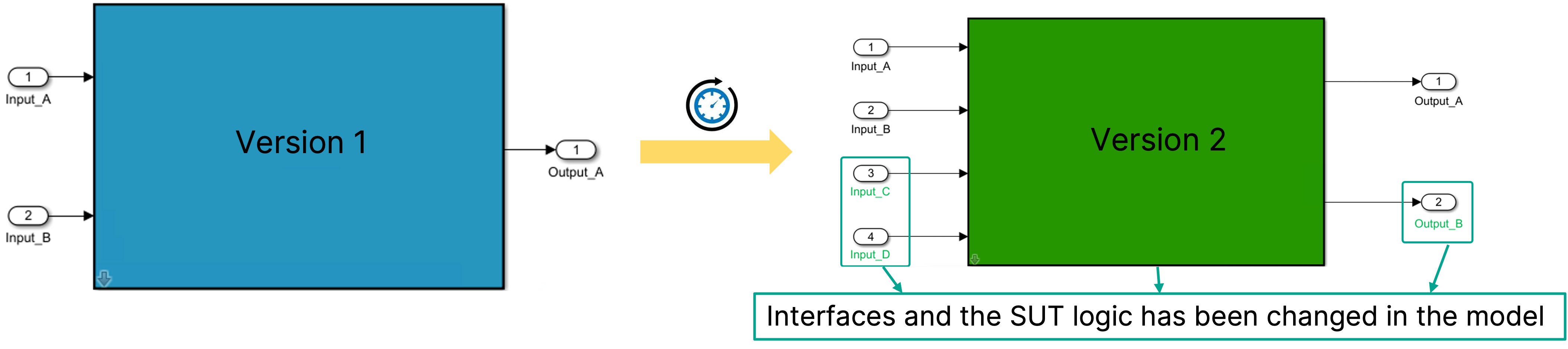

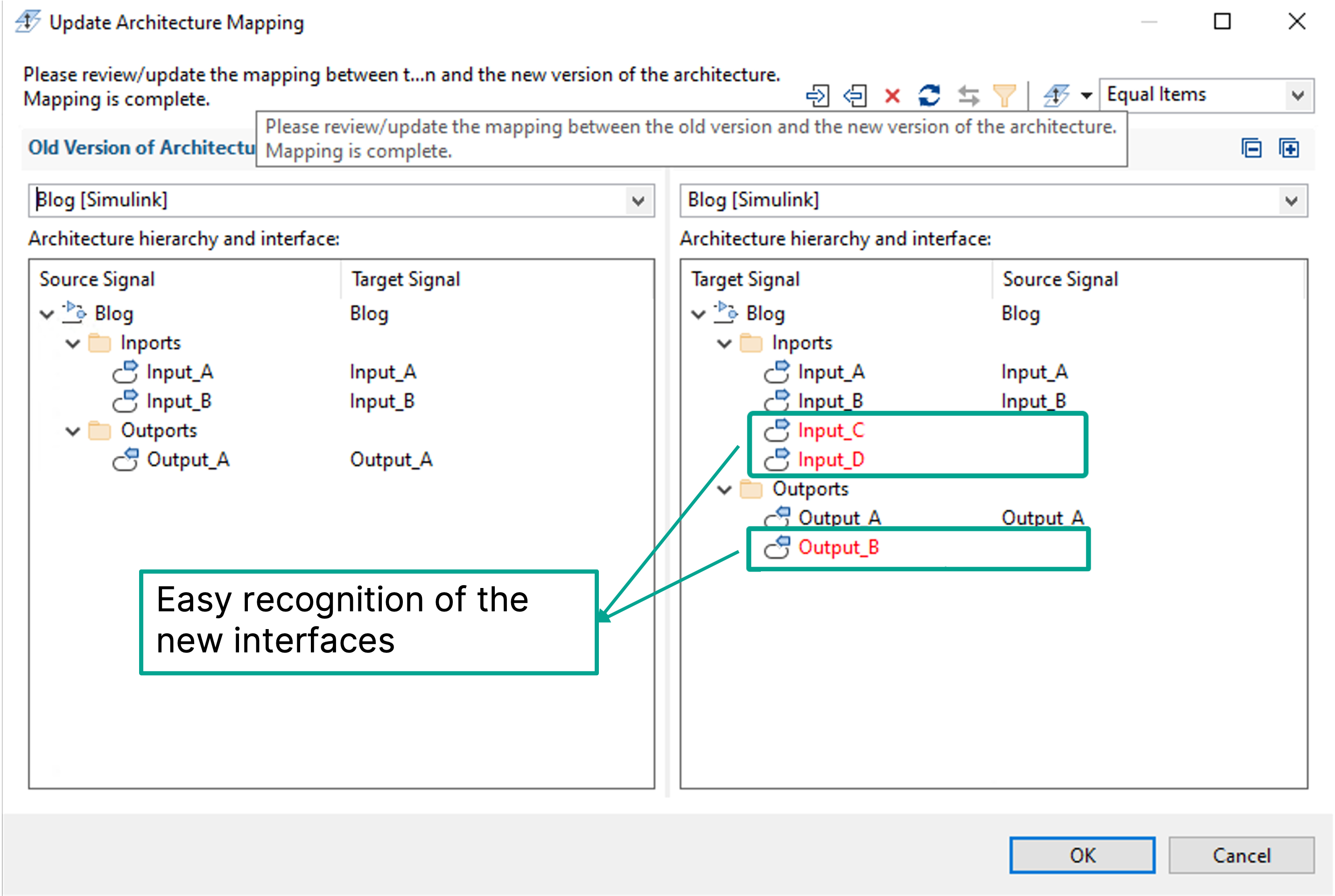

2. Adapting Tests to Interface Changes of the Model

During development, the model and its interface commonly change: interface elements are renamed, new ones are added, and existing ones are removed. Does this mean we have to adapt all our test cases manually? A better solution is to adapt the test profile and the test cases to these changes automatically. This keeps existing test cases relevant as the model evolves with quick and easy adjustments, and it lets you reuse the test cases you’ve already created, saving time and effort. Importantly, this method leaves the tester in full control, ensuring that all changes are intentional and that nothing is altered unintentionally in the background.

With tools like BTC EmbeddedPlatform, changes to the test interface or the model logic can be integrated into the testing workflow with the click of a button, saving valuable time.
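The adaptation step described above can be sketched in Python as follows. This is a simplified illustration, not how BTC EmbeddedPlatform implements it: a test case is assumed to be a dict mapping interface signal names to sample lists, and the hypothetical `InterfaceChange` record describes renamed, added, and removed signals. The function only proposes an adapted copy plus a change report. The original test case stays untouched, so the tester can review every change before accepting it.

```python
from dataclasses import dataclass, field


@dataclass
class InterfaceChange:
    """Hypothetical description of how the model interface changed."""
    renamed: dict   # old signal name -> new signal name
    added: dict     # new input name -> default value to fill in
    removed: set    # signal names no longer part of the interface


@dataclass
class Adaptation:
    """Proposed adapted test case plus a report for the tester to review."""
    test: dict
    report: list = field(default_factory=list)


def adapt_test_case(test: dict, change: InterfaceChange) -> Adaptation:
    """Propose an adapted copy of a test case; the original is never modified."""
    # Assumes all signals in a test case have the same number of samples.
    steps = len(next(iter(test.values()))) if test else 0
    adapted, report = {}, []
    for name, samples in test.items():
        if name in change.removed:
            report.append(f"drop '{name}' (removed from interface)")
        elif name in change.renamed:
            new_name = change.renamed[name]
            adapted[new_name] = list(samples)
            report.append(f"rename '{name}' -> '{new_name}'")
        else:
            adapted[name] = list(samples)  # unchanged signal, copied as-is
    for name, default in change.added.items():
        adapted[name] = [default] * steps
        report.append(f"add '{name}' filled with {default!r}")
    return Adaptation(adapted, report)
```

Returning a proposal instead of editing in place is the design choice that keeps the tester in control: nothing changes until someone accepts the report.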

3. Debugging Failed Test Cases

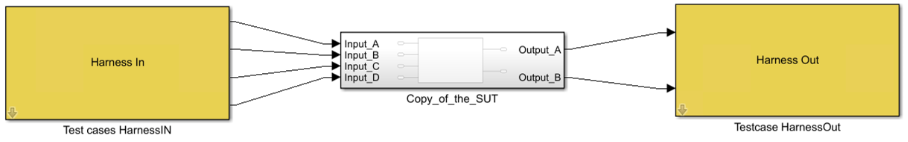

Let’s start with one recommendation: refrain from debugging directly on the original model. Instead, run and debug the tests of the System Under Test (SUT) on a self-contained copy of the model that includes input and output harnesses. This gives developers an efficient and flexible debugging environment in which they can isolate and examine the specific elements that may be causing a test case to fail. Working on a self-contained model keeps the debugging process streamlined and focused, facilitating targeted issue resolution.

Moreover, such a customized sandbox environment enables controlled experimentation: the failure conditions of a test case can be replicated and analyzed in any environment. This minimizes unexpected errors arising from tool dependencies and speeds up debugging. It also makes it easy to share the debug environment with colleagues or customers.

You can learn more about debugging with BTC EmbeddedPlatform here.

4. How to Be ISO 26262 Compliant During the Testing Process

Ensuring compliance with ISO 26262 in model-based testing is essential for maintaining the reliability and safety of systems, particularly in safety-critical applications. A key aspect is tool qualification, which in a safety-critical project is only necessary for certain tools. To determine whether it is needed, ISO 26262 defines a simple two-step process for assessing the required Tool Confidence Level (TCL): first, classify the tool impact (TI), that is, whether a malfunction of the tool could introduce errors into the safety-related software or fail to detect them; second, classify the tool error detection (TD), that is, the confidence that such errors would be prevented or detected. If no confidence in the tool is necessary, the lowest level, TCL 1, is sufficient and tool qualification is not required. Test tools such as BTC EmbeddedPlatform typically require a higher confidence level and therefore tool qualification. While this process can be costly and time-consuming, a tool pre-qualification by an independent body such as TÜV can significantly reduce the qualification effort on the user’s end. This blog article from Markus Gros explains in more detail when and how to qualify tools according to ISO 26262.
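The two-step classification can be written down as a small lookup. The mapping below follows the TI/TD-to-TCL table of ISO 26262-8 (TI1 always yields TCL 1; with TI2, TD1, TD2, and TD3 yield TCL 1, 2, and 3). The Python names are our own, and classifying a concrete tool in a concrete use case remains an engineering judgment that this sketch does not make for you.

```python
from enum import Enum


class TI(Enum):
    """Tool impact classes."""
    TI1 = 1  # argued that a tool malfunction cannot introduce or mask errors
    TI2 = 2  # all other cases


class TD(Enum):
    """Tool error detection classes."""
    TD1 = 1  # high confidence that tool errors are prevented or detected
    TD2 = 2  # medium confidence
    TD3 = 3  # all other cases


def tool_confidence_level(ti: TI, td: TD) -> int:
    """Return the TCL (1..3) for a given TI/TD classification."""
    if ti is TI.TI1:
        return 1  # no impact on the safety-related item: TD is irrelevant
    return {TD.TD1: 1, TD.TD2: 2, TD.TD3: 3}[td]


def qualification_required(ti: TI, td: TD) -> bool:
    """Tool qualification is only needed for TCL 2 and TCL 3."""
    return tool_confidence_level(ti, td) > 1
```

For example, if a test tool’s pass verdicts are used as verification evidence (TI2) and no later activity would catch a wrong verdict (TD3), the result is TCL 3, and the tool must be qualified.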

ISO 26262, in clause 9.4.5, describes a testing approach that suggests “conducting software unit testing at the Model level, followed by a Back-to-Back Test between the model and the object code.” Since executing test cases at both the Model-in-the-loop (MIL) and Software-in-the-loop (SIL) levels can be time-consuming, an effective way to meet this need is to automate the Back-to-Back test in a CI/CD pipeline.
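At its core, an automated Back-to-Back test compares the output traces recorded at MIL and SIL level for the same stimuli. The Python sketch below assumes each trace is a dict mapping output signal names to sample lists and uses `math.isclose` with hypothetical default tolerances. In practice, suitable tolerances depend on the data types and, for fixed-point code, on quantization. The hypothetical helper `ci_exit_code` shows how a CI job could turn the comparison into a pass/fail status.

```python
import math


def back_to_back(mil: dict, sil: dict,
                 rel_tol: float = 1e-6, abs_tol: float = 1e-9) -> dict:
    """Compare MIL and SIL output traces signal by signal.

    Returns a dict of deviations (signal -> description); an empty dict
    means both levels behaved identically within the tolerances.
    """
    deviations = {}
    for name in sorted(set(mil) | set(sil)):
        if name not in sil:
            deviations[name] = "missing in SIL"
        elif name not in mil:
            deviations[name] = "missing in MIL"
        elif len(mil[name]) != len(sil[name]):
            deviations[name] = f"length {len(mil[name])} (MIL) vs {len(sil[name])} (SIL)"
        else:
            for k, (x, y) in enumerate(zip(mil[name], sil[name])):
                if not math.isclose(x, y, rel_tol=rel_tol, abs_tol=abs_tol):
                    # Report only the first deviating sample per signal.
                    deviations[name] = f"step {k}: MIL={x!r} SIL={y!r}"
                    break
    return deviations


def ci_exit_code(deviations: dict) -> int:
    """0 = pass, 1 = fail, so a CI job can use it as its process exit status."""
    return 0 if not deviations else 1
```

A hypothetical pipeline would run both simulations on every commit, call `back_to_back` on the recorded outputs, print any deviations, and fail the build when `ci_exit_code` returns 1.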

5. How to Test the Untestable?

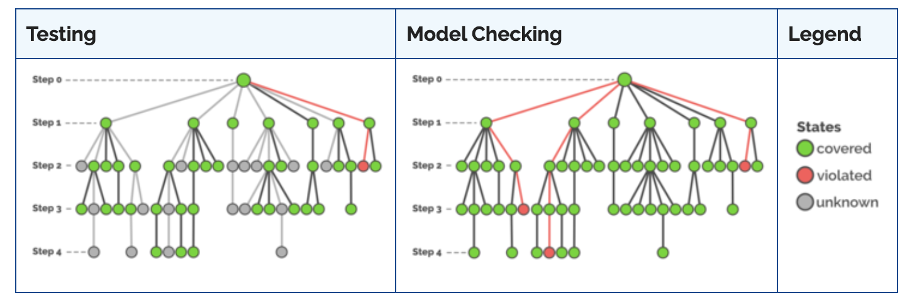

Usually, functional test cases are derived from requirements to check whether the system shows the desired behavior the requirement describes. But what about requirements stating that some undesired situation must never occur? Such a requirement is fulfilled only if no test case can bring about the situation, so no test case can demonstrate it: a passing test only shows that the situation did not occur for that particular stimulus. However, we can still prove it using Model Checking technology.

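Model Checking belongs to Formal Methods, a family of techniques for formal specification, development, verification, and theorem proving. Rather than executing individual test cases, a model checker exhaustively explores the possible behaviors of the system under test and either produces a mathematical proof that a property holds or returns a counterexample showing how it can be violated. This makes model checking a valuable complement to testing when analyzing the design, structure, and logic of the model.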
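To illustrate the principle, the Python sketch below checks the hypothetical requirement “the door is never open while the vehicle is moving” on a tiny, hypothetical state machine by breadth-first enumeration of every reachable state under every input combination. This explicit-state approach only works for very small finite models; industrial model checkers typically rely on symbolic techniques (for example, SAT- or BDD-based) to scale. The idea is the same, though: either the invariant holds in every reachable state, which is a proof rather than a test, or the checker returns the shortest input sequence that violates it.

```python
from collections import deque
from itertools import product

# Every combination of the two boolean inputs (req_move, req_open).
INPUTS = list(product([False, True], repeat=2))
INIT = (False, False)  # state = (moving, door_open)


def step_buggy(state, inputs):
    """Hypothetical controller: both outputs are computed from the old state."""
    moving, door_open = state
    req_move, req_open = inputs
    next_door = req_open and not moving
    next_moving = req_move and not door_open
    return (next_moving, next_door)


def step_fixed(state, inputs):
    """Same controller, but 'moving' depends on the updated door state."""
    moving, _door_open = state
    req_move, req_open = inputs
    next_door = req_open and not moving
    next_moving = req_move and not next_door
    return (next_moving, next_door)


def door_safe(state):
    """Invariant: the door is never open while the vehicle is moving."""
    moving, door_open = state
    return not (moving and door_open)


def check_invariant(init, step_fn, inputs, invariant):
    """Breadth-first search over all reachable states.

    Returns None if the invariant holds in every reachable state, otherwise
    the shortest list of inputs leading from init to a violating state.
    """
    parent = {init: None}  # state -> (predecessor, input) on a shortest path
    queue = deque([init])
    while queue:
        s = queue.popleft()
        if not invariant(s):
            trace = []
            while parent[s] is not None:
                s, inp = parent[s]
                trace.append(inp)
            return trace[::-1]
        for inp in inputs:
            t = step_fn(s, inp)
            if t not in parent:
                parent[t] = (s, inp)
                queue.append(t)
    return None
```

The buggy controller computes both outputs from the previous state, so requesting “move” and “open” in the same step violates the requirement, and the checker returns that one-step counterexample. The fixed version derives `moving` from the already updated door state, and the exhaustive search confirms that no reachable state violates the invariant.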

This interview between Wolfgang Meincke and Karsten Scheibler explains the importance of model checking technology in improving the quality assurance process, identifying and resolving errors, and creating more robust systems.

Conclusion

Efficient testing is crucial in Model-based Development (MBD) to ensure the reliability and safety of software systems, particularly in safety-critical applications. This blog highlights key strategies for effective testing: hierarchical testing for improved system robustness, adapting test cases to model interface changes, debugging the System Under Test (SUT) on a self-contained copy of the model, and addressing ISO 26262 compliance during the testing process. It also emphasizes automating Back-to-Back tests using CI/CD and introduces Model Checking as a tool to test the untestable, providing a mathematical proof that the system under test satisfies its requirements.