Over the past few decades, model-based development (MBD) has considerably improved the development of embedded software in the automotive industry. Although the MBD methodology makes the development process more efficient, function developers still run into some technical challenges. One example is the handling of external variables during software unit tests. In this blog article, we’ll discuss how to solve this challenge for unit tests of TargetLink code.

Motivation and challenges

Complex software applications often require a modular software architecture, as recommended by standards like ISO 26262. When the software architecture is split into smaller units (e.g. following a recommended modular model architecture), the ownership of interface variable definitions becomes distributed across the units. One unit may be responsible for defining its own output variables, or the definition of the interface variables may be delegated to one specific component (e.g. shared calibration variables or the I/O variables of all units). This type of architectural design is even more prominent in distributed development processes (e.g. with suppliers), where each party is responsible for the data it provides.

For software unit tests and MIL vs. SIL back-to-back tests, this can lead to major challenges. To compile and link the unit test, a definition of each non-owned variable is needed. One solution could be to include the real external production code file in the unit test compilation process. But this often leads to problems, as this file can in turn have other dependencies and headers which are either unavailable or hardware dependent. Another issue is that tests are usually executed on both model level (MIL) and code level (SIL/PIL). If the included external files define variables with arbitrary initialization values, we can introduce differences between model and code.
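The initialization issue can be illustrated with a minimal C sketch. All names (`Cal_Gain`, `Unit_Step`, `Model_Step`, `BackToBack_Matches`) and all values are hypothetical and not taken from a real project or from TargetLink output. The last definition merely stands in for an external production file whose init value differs from the one the model assumes.

```c
#include <stdbool.h>

/* Declaration only: the unit uses this calibration variable but does not own it.
   Without a definition somewhere, the unit test cannot be linked. */
extern float Cal_Gain;

/* Hypothetical unit under test (code level, SIL). */
float Unit_Step(float in)
{
    return Cal_Gain * in;
}

/* Hypothetical reference: what the model (MIL) computes with the init value
   it assumes for the same variable. */
#define MODEL_CAL_GAIN 2.0f

float Model_Step(float in)
{
    return MODEL_CAL_GAIN * in;
}

/* Back-to-back check: do model and code agree within a tolerance? */
bool BackToBack_Matches(float in, float tol)
{
    float diff = Unit_Step(in) - Model_Step(in);
    if (diff < 0.0f) {
        diff = -diff;
    }
    return diff <= tol;
}

/* Stand-in for the external production file: it defines the variable with a
   different (arbitrary) init value than the model assumes. */
float Cal_Gain = 2.5f;
```

With these assumed values, `BackToBack_Matches(4.0f, 0.001f)` returns false: the code yields 10.0 and the model 8.0, although the unit logic itself is identical.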

Another solution could be to let the code generator generate these variables together with the production code. But then we need an error-prone process to remove these extra variables again before the software integration phase.

In dSPACE TargetLink, the code generator can generate the external variables automatically without making them part of the production code. This enables smooth unit testing of TargetLink code. Let’s have a look at how this is done.

How to manage external variables for unit test of TargetLink code

Create a dedicated variable class and a module

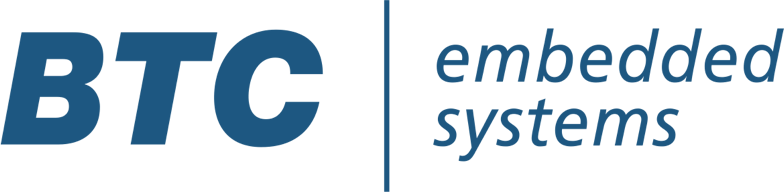

Let’s consider a model that uses shared calibration variables which are defined in another component and only read by this function. In the TargetLink Data Dictionary, we will first create a dedicated variable class:

- Start by duplicating the existing “CAL” class and renaming it however you like

- Reference this variable class for all shared calibration variables

- Create a module object named after the “real” external file that holds the variable definitions

- Reference this module in your variable class

With this configuration, the C code generated from the model uses these variables and includes the right header file for their declarations. However, the “external” code files will also be generated in the production code folder, which is something we don’t want.

Define a module ownership

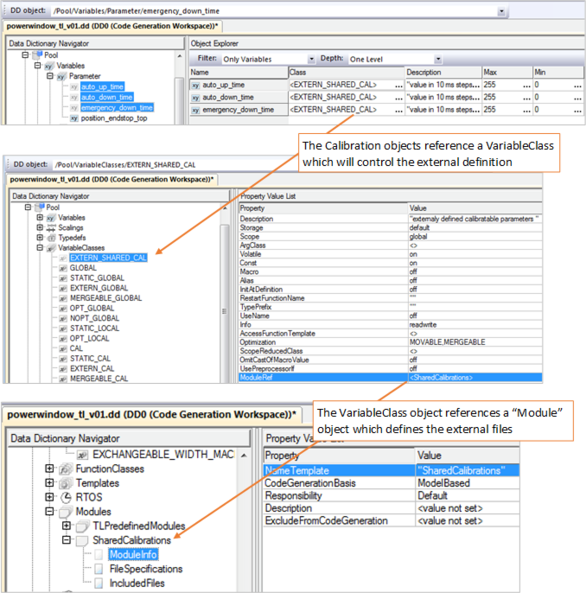

Using the “module ownership” feature, we can solve this:

- Create a “module owner” element

- Specify a system name for the module ownership. This can be any string except the name of your TargetLink code generation unit. For a Data Dictionary that is shared across the project, it could be the name of the TargetLink model that is actually responsible for these variables

- Reference the module that you created before

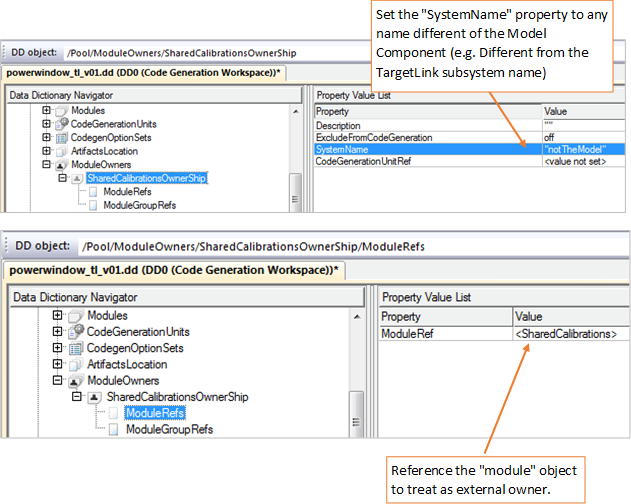

As a result of this configuration, TargetLink stores these files in a separate folder instead of putting them in the actual production code folder. The files are so-called stub code and are kept apart from the actual source files. Note that the production code itself is not changed by this configuration, and the software unit is ready to be tested.
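To make the split more concrete, here is a rough C sketch of what ends up where. The file, function, and variable names (`SharedCal.h`, `SharedCal.c`, `Controller.c`, `Controller_Step`, `Cal_Scale`, `Cal_Offset`) are hypothetical, and real TargetLink output looks different in detail. The three parts are shown in one listing only so that the sketch compiles on its own.

```c
/* --- SharedCal.h (hypothetical): declarations seen by the unit --- */
extern float Cal_Scale;
extern float Cal_Offset;

/* --- Controller.c (hypothetical), production code folder ---
   In real code this file would #include "SharedCal.h". It only uses the
   variables and contains no definition of them. */
float Controller_Step(float in)
{
    return Cal_Scale * in + Cal_Offset;
}

/* --- SharedCal.c (hypothetical), stub code folder ---
   Definitions for the unit test build only. The init values are assumed to
   match the ones the model uses. This file is not delivered; at integration
   the real external file provides the definitions instead. */
float Cal_Scale = 2.0f;
float Cal_Offset = 1.0f;
```

Because the definitions live only in the stub file, removing that file from the build later leaves the production file untouched.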

Conclusion

Configuring TargetLink with the “module ownership” mechanism enables efficient, automated handling of external variables for unit tests of TargetLink code. The unit source code and the stub code are created automatically in separate folders and are ready to be compiled for the unit test. MIL and SIL simulations can use synchronized data properties such as data type and init value. The user can thus focus on the actual testing activities, for example with BTC EmbeddedTester. After the unit test, the stub code can be discarded and the unit source code integrated with the actual external variable source code during the software integration phase.

It’s worth noting that AUTOSAR-compliant software considerably reduces the need for external variables. Because all AUTOSAR interfaces are managed (i.e. defined) in the AUTOSAR Run-Time Environment (RTE), the user can rely on the development tools to handle the AUTOSAR semantics automatically. For instance, TargetLink provides a self-contained RTE frame which can be tested in BTC EmbeddedTester.