Creating different variants of a software component is an efficient way of reusing existing and developed features for different versions of a specific component or controller. Thus, testing variants is an ubiquitous task in the automotive industry that needs to be taken into account during testing.

How are variants created in a Model-based Software development process?

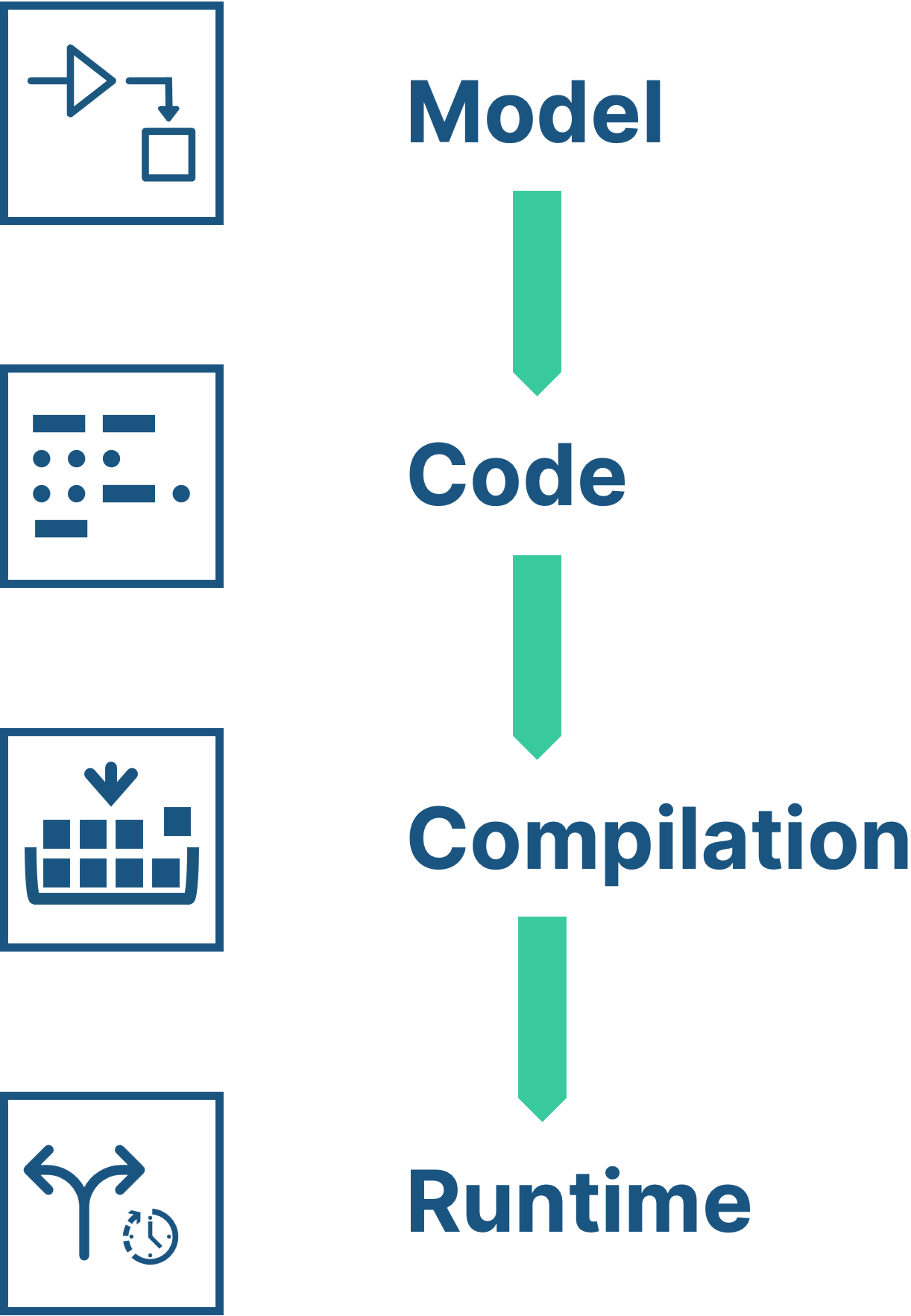

When looking at the development process, we can distinguish between different moments in which the decision for a concrete variant gets “frozen”:

- During Model construction: The variant binding is realized by model adaptation. Only the active variant is visible in tools like Simulink or TargetLink. In this way, the model and the generated code are tailored to the current variant configuration.

- During Code generation: All variants are present in the model, but only one variant is considered during code generation. As above, the generated code contains only one variant

- During Compilation: The variant configuration is done before compilation by preprocessor macros. The C Code contains all variants, but the binary code (=object code) reflects the variant configuration.

- At runtime: The variant is selected at or before runtime, i.e. by changing a calibration variable or by configuring a piece of configuration memory. In this case, the same binary code is used for all variants.

From the code perspective, variants 1 and 2 are identical. For variants 3 and 4, you will have one code file but for variant 3 the variant will be fixed during compilation while for variant 4 it can be modified later by setting the appropriate calibration values (even during runtime).

What does this mean for testing variants?

First things first. Before talking about the different aspects to consider during testing, let’s define the term ‘test project’. A test project in the context of this article contains:

- The model from which the production code will be generated

- A simulation environment to drive the inputs and calibration values as well as read the internal measurement points and outputs.

- A clear interface definition for the used signals

- Test data

- Reporting

- Optionally, requirements

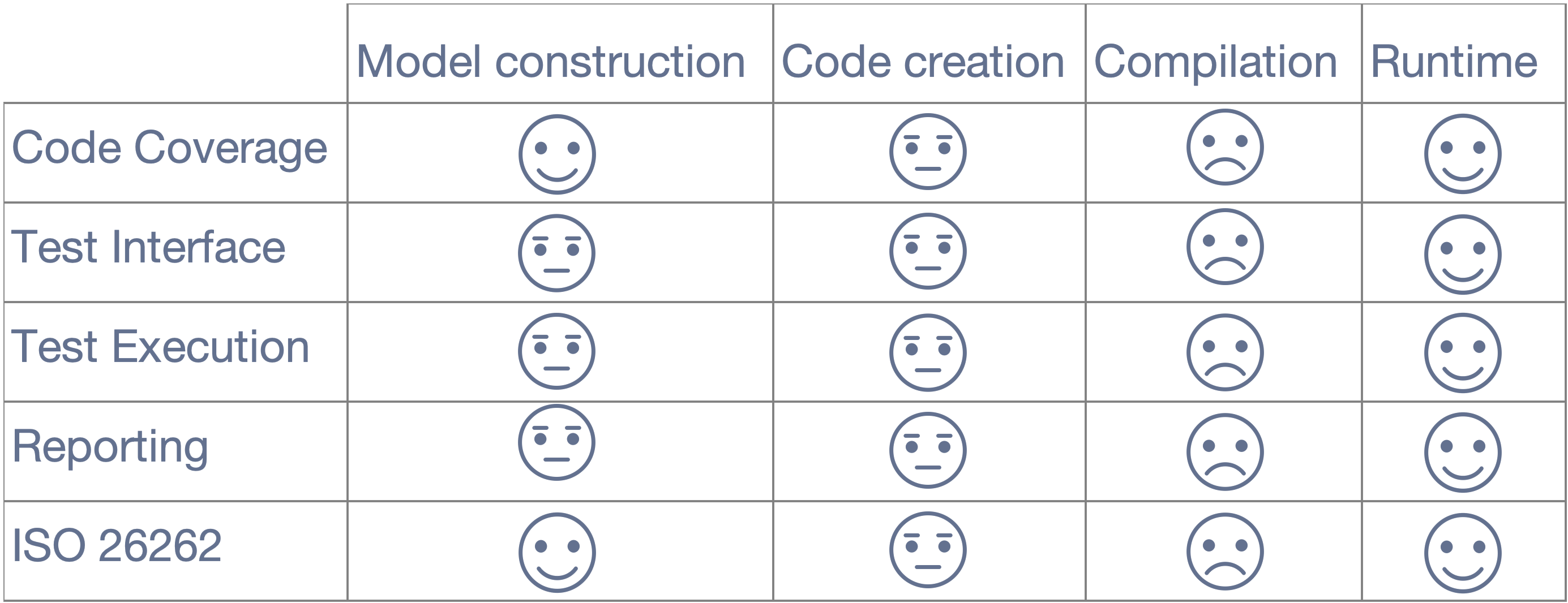

Next let’s have a look at the constraints we have depending on the different binding times and also discuss the advantages or disadvantages.

1. During model construction time

If defining the variants in separate models you will derive a dedicated model and the corresponding code for each variant.

Code Coverage

The derived or handwritten code represents one variant, this means that you should derive full code coverage to test the variant completely. This also applies for the model coverage and the code is a concurrent representation of the model.

Test Interface

While looking at one variant, the test interface between model and code will be the same which enables an easy handling of test data for both instances and the test data can be reused for both as well. Apart from that this might not apply for other variants and the reusability of test data might suffer.

Test Execution

The constraints from the test interface has a direct link to the execution records. At a first glance this seems to be a simple task as well because the test interfaces of model and code match and therefore you can simulate the test data on both instances, however, in the context of variants, you will have several models/code for each instance and the reusability of the test data becomes difficult if the interface differ between the instances.

Reporting

The reporting is also included in the previous limitations. You will get a separat report for each variant.

ISO 26262

The ISO 26262 will be fulfilled by this approach. One model will be tested against its derived code and there are no unexecuted parts on one of the instances.

This approach is useful if you have big differences between variants and it makes sense to keep things separate on that level. However, keep in mind that on implementation level this are not variants anymore but independent models/code.

2. During code generation time

The next approach of handling variants is to represent the different variants in the model but the code will one handle one variant. When talking about handwritten code or a code only view, the same implications are valid then for the „during model construction time“ approach. This changes if we consider a model-based development and testing approach.

Code Coverage

The code represents one variant and therefore must be fully tested but you will not be able to derive full coverage on model level with the created test data, because the model represents additional variants.

Test Interface

Depending on the variants, the test interface on model level might have more inputs than on code level for a specific variant.

Test Execution

The reuse of test cases might become tricky since you either have missing interface elements or you must get rid of some or both.

Reporting

This use case will also create one report for each variant, because the code is handled separately and only reflects the handling of one variant but does not fully represent the model part, of course.

ISO 26262

It would certainly be going too far to say that this approach is not ISO compliant but for a model-based development and testing workflow this approach is not recommended since model and code differ which makes especially the reporting complex and more difficult to follow.

When looking at handwritten code you should keep in mind that you have the same constraints like the „during model construction time“ approach.

3. During compilation

To avoid getting separate models and code for each variant, you may use pre-processor #defines to distinguish between the different variants. Since you have only one version of the model and code, it reduces the effort to maintain different variants and things that will be used for all variants only need to be developed once. This also may bring up the conclusion that you can also test all variants within one test project. While this might apply for static test methods, things become tricky for dynamic testing.

Code Coverage

Most important, you will not be able to measure code coverage with high confidence. If supported at all, the code coverage measurement is a patchwork quilt of the different variants and does not reflect the parts of the code from the different variants separately. This might even lead to ambiguous results. And which code coverage should be shown? The one of a single variant, a subset of variants, or all of them? And most importantly: The fact that some code is covered in one variant doesn’t mean it can be covered in other variants. This means, showing the absence of unreachable code in each variant is only possible by creating separate test projects.

Test Interface

Things become even more complex if the interfaces of the variants are different. One variant might have more interface objects than another one. This brings additional challenges to the handling and writing of test data.

Test Execution

For the simulation, each variant must be compiled before-hand. In the worst case scenario, this happens before each test execution. The test cases must be adapted to the corresponding interface and if running them on both model and code, the test case must in addition carry the data to turn on the correct variant on the model level.

Reporting

Like the Code Coverage, a report covering all variants is not meaningful regarding the simulated software, which you finally want to test. This means you will generate all reports (Code Coverage, Test Execution, Interface, Back-to-Back Testing Results, etc.) separately for each variant.

Debugging

When it comes to debugging your code, what you see is actually not what you get. During debugging, you will look at the C Code but the test execution happens on the compiled object code. And it’s not always easy, to identify the code elements which belong (or don’t belong) to a certain variant.

Consequences

Even though it might be technically possible to manage all the test data and different interfaces, execution records, reports, etc. within one test project, it will be hard to keep track of all the different artifacts. Also, minor changes to the code affecting a single variant will impact the whole test project. As much as I understand the idea of why you want to put all the variants in one test project, it makes things complex and error-prone.

If you cannot avoid this approach, our recommendation is to create a separate test project for each variant. Please, also read the article Living in the #ifdev Hell from Dr. Florian Deißenböck and the whitepaper The Love-Hate Relationship with the C Preprocessor: An Interview Study.

4. At runtime

In this case you will have one model/code version and only one compiled version but keep the flexibility of binding at compiling time. There are still all variants within the test project, however, the different variants are handled by the calibration data.

Code Coverage

The code represents all variants available. You will derive full code coverage and full model coverage for all of them and the test data applies to all variants. This also considers the model coverage in addition.

Test Interface

The test interface is stable and variants are only handled by calibration parameters that can be different in each test case. The interface matches between both, model and code.

Test Execution

One execution of all test cases will cover all variants implemented and can be executed on both levels (model and code).

Reporting

You will only get one report for all variants.

ISO 26262

This approach is fully ISO 26262-compliant AND considers all variants in one report.

This approach is useful because it also considers a valid and good handling of the project from a testing and ISO 2626 perspective. One could argue that this approach might increase memory consumption and execution time on the controller. But looking at today’s controllers, the memory and computation capacities are much larger than ever before. And if it is really about each bit, byte, and macrosecond, I recommend anyway to go with “binding at model or code level” approach.

Conclusion

“Binding at model construction time” will lead to a project setup, in which each variant is independent on model-level, code-level, and then also for the test project.

“Binding at model construction time” will lead to a project setup, in which each variant is independent on model-level, code-level, and then also for the test project.

“Binding at code generation time” allows you to test all variants together on the model level, followed by an independent Back-to-Back test per variant. If we want to test model and code together, we’d setup independant test projects for each variant.

When talking about variants from a testing point of view, “Binding at compilation time” brings up the most constraints and makes the management of a test project very complex. In the end, it usually leads to a workflow that is similar to the one mentioned above for “Binding at code generation time”.

From a test perspective, “Binding at runtime” seems to be the most convenient method for testing software variants. Compared to other approaches, it enables you to easily manage all variants in one test project. With a single model/code version and compiled code, variants can be managed through calibration data within a single test project. This approach reduces complexity, ensures a stable interface, and enables comprehensive reporting.

If you find this blog article useful, you might also be interested in my blog article about Why Back-to-Back Testing is still needed for floating-point.